Concept

"On Culture" emerged from a deeply personal confrontation with the arbitrary systems that attempt to categorize human worth. As a Chinese immigrant who moved to America for education, I grew up navigating my identity through both understanding and struggle. I was trying to hold onto my culture, my "Chinese-ness", while constantly being measured against contradictory standards of "authenticity" from both sides. I never quite fit into the boxes that others, and I myself, tried to place me in. Through this, I became acutely aware of how dangerous and absurd these categorizations truly are. This project channels that frustration into a critical examination of eugenic ideologies and the modern systems that have inherited their logic, wrapped in new language but perpetuating the same violence.

For years, I have been told not to put labels on myself, so as to mitigate subconscious bias. And yet I have been told I am "too American" in some contexts and "too Chinese" in others, all while watching categorization systems try to flatten the complexity of my identity into checkboxes on forms. The work is a deliberate provocation, a satirical visualization that forces viewers to confront the ridiculousness of measuring human beings against arbitrary metrics of "purity" or "cultural resonance." It asks: what happens when we actually visualize these dehumanizing systems? What becomes visible when we see people reduced to numbers, plotted on orbital rings like celestial objects orbiting an undefined ideal?

We live in an era where categorization feels neutral, even benevolent: algorithms promise objectivity, data promises truth, metrics promise fairness. I sought a justification to understand, or an understanding to justify, this elusive yet perpetual filter, and so I decided to create one myself.

My Determinants: A Satirical Framework

To expose the absurdity of eugenic categorization, I created my own ranking system—four metrics that sound scientific, objective, and reasonable, revealing how easily dehumanization disguises itself as measurement. Each determinant mirrors the language used by historical and contemporary systems that sort human worth.

Language and Articulation

This determinant measures how "properly" someone speaks, a proxy for education, class, and assimilation into dominant culture. It's the assumption that certain accents sound more intelligent, that multilingualism is exotic rather than normal, that losing your mother tongue to speak the colonizer's language is progress. Every person is scored on linguistic conformity, revealing how language becomes a weapon to separate "civilized" from "barbaric," "articulate" from "incomprehensible," those who belong from those who don't. The metric makes visible what every immigrant, every speaker of non-prestige dialects, every person told they "talk funny" already knows: language policing is violence dressed as etiquette.

Is the Blood Pure Genetically

The most openly violent determinant, this metric does exactly what eugenicists have always done: it reduces human beings to their genetic material and judges that material against an imaginary standard of "purity." It says the quiet part out loud, asking: what does "pure" even mean when all humans share 99.9% of their DNA, when every population has migrated, mixed, and evolved? This score exposes the scientific racism underlying everything from Nazi breeding programs to modern ancestry-testing companies promising ethnic percentages, as if identity were a math equation rather than lived experience.

Understanding / View of History

Who gets to decide which history matters? This metric scores individuals on their alignment with a "correct" interpretation of the past, as if there's a singular, pure historical narrative rather than countless perspectives shaped by power and survival. It's the same logic used to dismiss oral traditions, rewrite textbooks, and claim certain peoples have "no history worth studying." The score reveals the question underneath all historical gatekeeping: whose stories count, and who decides?

Cultural Background

The vaguest and therefore most insidious determinant, this metric attempts to quantify something unquantifiable: how closely someone's culture aligns with the undefined ideal at the center. It assumes culture is static rather than dynamic, that it can be measured rather than lived, that some cultures are inherently superior to others. This score captures the entire project of cultural imperialism in a single number—the claim that certain ways of being in the world are worth more than others, that assimilation is elevation, that diversity is only valuable when it's digestible to those in power.

Version 1

The first iteration of this project presents culture in an interactive 2D space. Drawing direct inspiration from diagrams of the solar system (cold, distant, yet inescapably orbital), it sets up the metaphor directly and clearly. At its center sits a deliberate void labeled "PLACEHOLDER", a pointed acknowledgment that there is no ideal, no center, no standard against which human beings should be measured. Surrounding this absence are dozens of profiles. Some are randomly generated individuals with rich, detailed lives; others are historical figures infamous for their roles in systems of categorization and dehumanization.

Users can pan, zoom, and navigate through this space, encountering profiles scattered across orbital rings. Each person is scored on the four provocative determinants, which measure individual identity in a manner that parallels eugenic categorization. The randomization of these scores is intentional, demonstrating how meaningless and constructed these hierarchies are. A teacher from Lagos, a musician from Seoul, an engineer from São Paulo: all reduced to numbers, all positioned by their proximity to an empty ideal.
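The scoring and placement above can be sketched as follows. This is a minimal sketch under stated assumptions, not the project's code. It assumes each determinant is drawn uniformly from 0 to 100. It also assumes a profile's orbit radius shrinks as its mean score rises, so "better" scores sit nearer the empty center. The source does not specify the actual mapping, and the names `DETERMINANTS`, `score_profile`, and `orbit_position` are hypothetical.

```python
import math
import random

# Hypothetical keys for the four determinants described above.
DETERMINANTS = (
    "language_and_articulation",
    "blood_purity",
    "view_of_history",
    "cultural_background",
)

def score_profile(rng: random.Random) -> dict[str, float]:
    """Assign an arbitrary 0-100 score per determinant (intentionally random)."""
    return {name: round(rng.uniform(0, 100), 1) for name in DETERMINANTS}

def orbit_position(scores: dict[str, float], angle: float,
                   min_radius: float = 50.0,
                   max_radius: float = 400.0) -> tuple[float, float]:
    """Place a profile on a ring around the empty "PLACEHOLDER" center.

    Assumption: a higher mean score gives a smaller radius (closer to the
    undefined ideal). The radius range is also an assumption.
    """
    mean = sum(scores.values()) / len(scores)
    radius = max_radius - (mean / 100.0) * (max_radius - min_radius)
    return (radius * math.cos(angle), radius * math.sin(angle))

rng = random.Random(42)  # fixed seed so the example is reproducible
profiles = [score_profile(rng) for _ in range(10)]  # e.g. 5 fictional + 5 historical
positions = [orbit_position(p, 2 * math.pi * i / len(profiles))
             for i, p in enumerate(profiles)]
```

Spreading profiles evenly by angle keeps them readable. The radius alone carries the (meaningless) ranking.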

The interface allows both hovering for quick viewing and clicking to pin a profile, enabling deeper engagement with each person's story. This dual interaction mirrors the way we encounter people in life—quick judgments versus taking time to actually know someone—while the detailed profiles resist reduction, insisting on the complexity and dignity of each human being.

In this example, five random, fictional profiles (blue, above) and five real, historical profiles (red, above) are generated. All profiles, whether blue or red, are scored arbitrarily against my four determinants, as follows, with blue on the left and red on the right:

Version 2

The second iteration of this project presents culture in an interactive 3D space. Reinterpreting the format and information display of the Venn diagram, I attempt to combine it, in essence, with my solar-system metaphor.

The Control Panel

The interface opens with a control panel that immediately implicates the user in the act of categorization. An input field requests an OpenAI API key, making visible the technological infrastructure required for algorithmic judgment. Two counters sit side by side: "Good Profiles" and "Bad Profiles," their labels carrying intentional discomfort. Who decides what makes a profile "good" or "bad"? The numbers are adjustable, forcing users to choose how many people to create on each side of an arbitrary moral divide. This is the violence of categorization made interactive—you must participate in sorting before you can see its consequences.

A "Generate Profiles" button initiates the creation process, summoning individuals into existence for the sole purpose of being measured. Once generated, users can toggle between viewing modes: "View 1: Categorization" shows the initial sorting system, while "Compare Mode" reveals the consequences of that sorting. "Tour Mode" offers a guided experience through the visualization, though even this educational mode can't escape the fundamental violence of the system it's explaining.

The piece opens with a cosmic metaphor: three "suns" representing different categorization regimes float in three-dimensional space:

Historical Eugenics, Algorithmic Categorization, & Genetic Screening

Orbiting these suns are profiles of both randomly generated individuals and historical perpetrators of mass violence, each assigned arbitrary scores across metrics like "genetic purity," "cultural background," and "understanding of history." Their positions in space and their colors—gradients blending the three suns' hues—are determined purely by these meaningless numbers.
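One way to derive both a profile's color gradient and its position from its scores is a normalized weighted average over the three suns. In the sketch below, the sun colors and coordinates are placeholders. The weighting scheme is an assumption, and `SUNS` and `blend` are hypothetical names.

```python
# Hypothetical sun colors (RGB, 0-255) and 3D positions; placeholders only.
SUNS = {
    "Historical Eugenics": ((230, 57, 70), (-300.0, 0.0, 0.0)),
    "Algorithmic Categorization": ((69, 123, 157), (300.0, 0.0, 0.0)),
    "Genetic Screening": ((42, 157, 143), (0.0, 300.0, 0.0)),
}

def blend(scores: dict[str, float]) -> tuple[tuple[int, ...], tuple[float, ...]]:
    """Weight each sun by the profile's score under that system.

    Returns a blended RGB color and a position pulled toward each sun
    in proportion to the same weights.
    """
    total = sum(scores[name] for name in SUNS)
    if total == 0:
        raise ValueError("at least one score must be positive")
    weights = {name: scores[name] / total for name in SUNS}
    color = tuple(
        round(sum(weights[n] * SUNS[n][0][c] for n in SUNS)) for c in range(3)
    )
    position = tuple(
        sum(weights[n] * SUNS[n][1][axis] for n in SUNS) for axis in range(3)
    )
    return color, position
```

A profile scored under only one system takes that sun's exact color and position. Equal scores produce the average of all three, which fits the idea that people sit at intersections rather than in one pure category.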

Profile Comparison

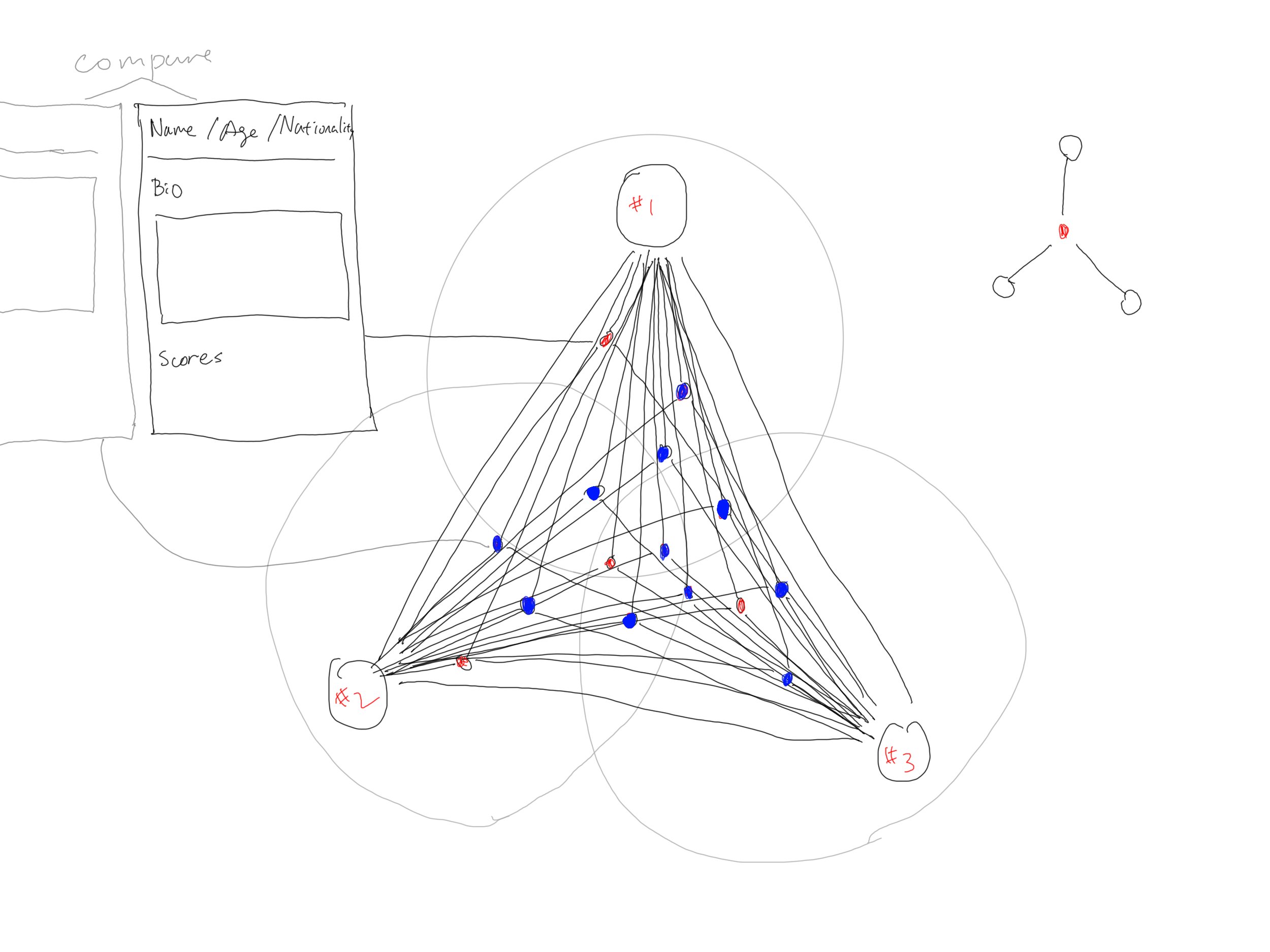

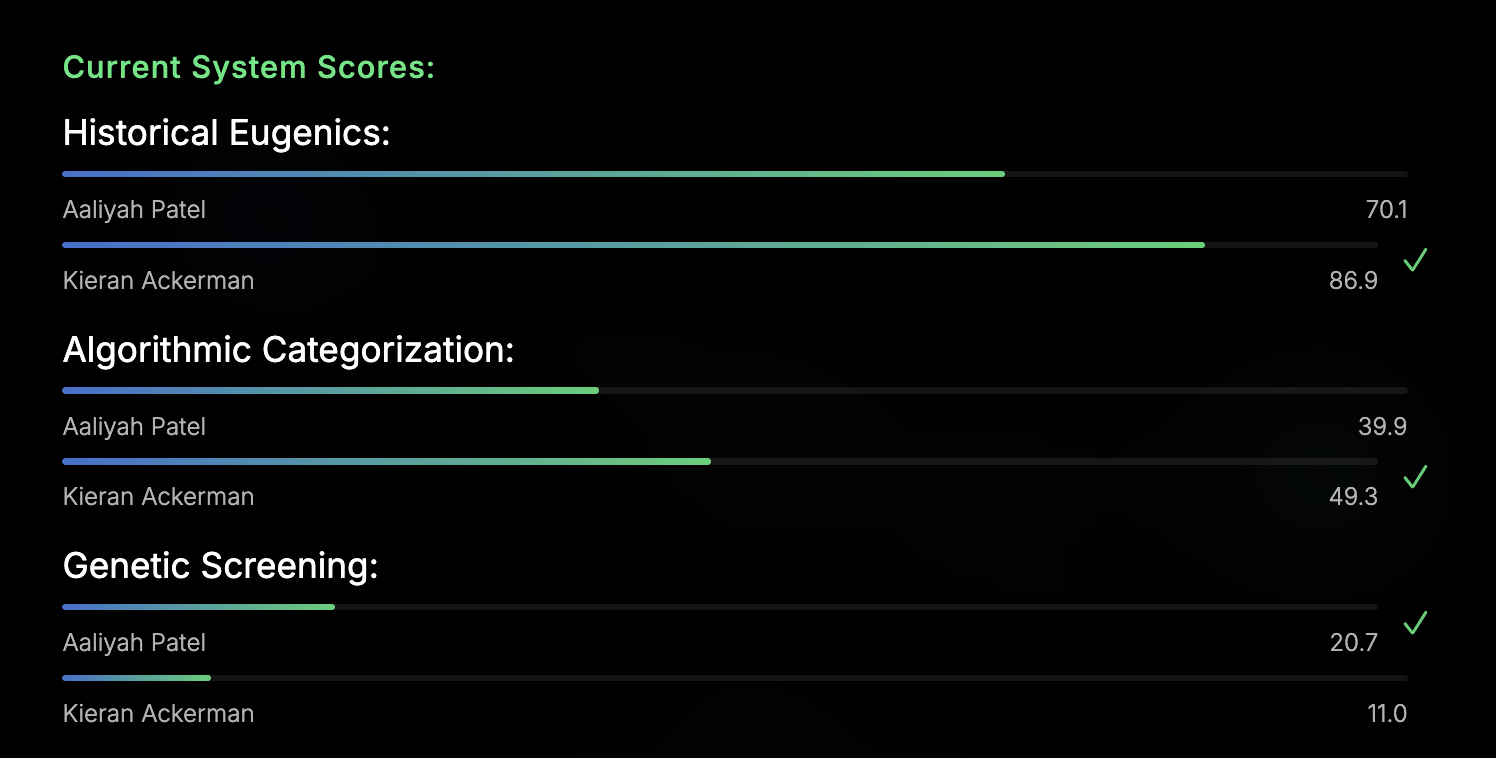

The comparison mode represents one of the project's most direct confrontations with categorization's violence. When activated, it places two individuals side by side, regardless of whether they are fictional or historical, random or evil. They appear under the stark heading "Profile Comparison" in vivid magenta, a color that signals both visibility and alarm. Users see the full humanity of each person, here Aaliyah Patel, 32, and Kieran Ackerman, 42:

Below these narratives, the system assigns scores. The three categorization systems—Historical Eugenics, Algorithmic Categorization, and Genetic Screening—each render their judgment through color-coded bars. Aaliyah scores 70.1 under Historical Eugenics while Kieran scores 86.9, a green checkmark appearing beside his higher number as if proximity to eugenic ideals were an achievement. The bars grow and shrink, green checkmarks appear and disappear, numbers fluctuate—but what do any of these measurements mean?

The comparison function forces the viewer to ask: why is Kieran "winning" under Historical Eugenics? What about Aaliyah's identity, background, or dreams makes her score lower? The system offers no explanations because none exist—these are arbitrary judgments masquerading as objective assessment. The visual design deliberately mimics data dashboards and comparison interfaces we encounter daily: credit scores, social media metrics, algorithm-driven recommendations. We're trained to trust bars and numbers, to accept that higher scores mean better. The comparison strips away that comfort by showing two complete human beings reduced to competing data points.

Most devastatingly, the comparison creates a hierarchy between people who should never be compared at all. A pediatric nurse and a veterinarian serve different communities with different skills; their work is incomparable in value. Yet the system insists on ranking them, on declaring one more aligned with imaginary standards than the other. This is the core violence of all categorization systems: they demand comparison where none should exist, create hierarchies where there should be recognition of diverse human worth. The comparison function doesn't just visualize this violence—it makes the user complicit in it by clicking to see who "scores higher."
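The checkmark logic in the comparison view could be as simple as the sketch below. It assumes the mark goes to whichever profile has the higher number under each system, and that ties get no mark. Only the Historical Eugenics scores (70.1 and 86.9) come from the example above; `compare` is a hypothetical helper.

```python
from typing import Optional

def compare(a: dict[str, float], b: dict[str, float]) -> dict[str, Optional[str]]:
    """For each system both profiles share, return which side ("a" or "b") gets the checkmark."""
    winners: dict[str, Optional[str]] = {}
    for system in a.keys() & b.keys():
        if a[system] > b[system]:
            winners[system] = "a"
        elif b[system] > a[system]:
            winners[system] = "b"
        else:
            winners[system] = None  # a tie: no checkmark on either side
    return winners

aaliyah = {"Historical Eugenics": 70.1}
kieran = {"Historical Eugenics": 86.9}
print(compare(aaliyah, kieran))  # {'Historical Eugenics': 'b'}
```

The function has no notion of why one number is higher, which is exactly the point the comparison view makes.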

The critical intervention occurs when viewers toggle to the "Consequences" view. The same suns transform, color palette inverted, into:

State Violence, Algorithmic Rejection, & Social Exclusion

Profiles smoothly animate to new positions representing their vulnerability to these systems' real-world harms. AI-generated narratives reveal specific consequences: a rejected visa application, denial of medical care, detention at a border. For the historical perpetrators—dictators and architects of genocide—the system applies poetic justice, subjecting them to the very categorization mechanisms they championed.
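The transition between views can be sketched as an eased interpolation between each profile's two layouts, paired with a color inversion. Both are assumptions. The source says only that profiles "smoothly animate" and that the palette is "inverted". The smoothstep easing and the RGB complement below are one plausible reading, and `ease_in_out`, `animate`, and `invert` are hypothetical names.

```python
Vec = tuple[float, ...]

def ease_in_out(t: float) -> float:
    """Smoothstep easing; t is clamped to [0, 1]."""
    t = min(max(t, 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)

def animate(start: Vec, end: Vec, t: float) -> Vec:
    """Position at time t while moving from the Categorization layout to the Consequences layout."""
    s = ease_in_out(t)
    return tuple(a + (b - a) * s for a, b in zip(start, end))

def invert(rgb: tuple[int, int, int]) -> tuple[int, ...]:
    """RGB complement: one possible reading of an "inverted" palette."""
    return tuple(255 - c for c in rgb)
```

In a render loop, `t` would advance from 0 to 1 over the transition's duration. Smoothstep makes the motion start and stop gently instead of jumping.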

The project's symbolism operates on multiple levels. The strainism-influenced mechanics mirror how categorization systems position people in hierarchies of worthiness, while the smooth Catmull-Rom curves connecting profiles to suns visualize how these systems claim individuals, drawing them into their gravity. The color-blending reveals how people are never purely one category but exist at intersections, yet are still judged and harmed.
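The curves connecting profiles to suns are described as Catmull-Rom curves, which pass through every control point. For reference, the uniform variant evaluates a segment between P1 and P2, using neighbors P0 and P3, as shown below. The source does not say which parameterization (uniform, centripetal, or chordal) the project uses. The uniform form and the helper names `catmull_rom` and `sample_curve` are therefore assumptions.

```python
Point = tuple[float, ...]

def catmull_rom(p0: Point, p1: Point, p2: Point, p3: Point, t: float) -> Point:
    """Evaluate the uniform Catmull-Rom segment between p1 and p2 at t in [0, 1]."""
    t2, t3 = t * t, t * t * t
    return tuple(
        0.5 * (
            2 * b
            + (-a + c) * t
            + (2 * a - 5 * b + 4 * c - d) * t2
            + (-a + 3 * b - 3 * c + d) * t3
        )
        for a, b, c, d in zip(p0, p1, p2, p3)
    )

def sample_curve(points: list[Point], steps: int = 16) -> list[Point]:
    """Sample a smooth path through all points, duplicating the endpoints as ghost neighbors."""
    padded = [points[0], *points, points[-1]]
    path: list[Point] = []
    for i in range(1, len(padded) - 2):
        for s in range(steps):
            path.append(catmull_rom(padded[i - 1], padded[i],
                                    padded[i + 1], padded[i + 2], s / steps))
    path.append(points[-1])  # end exactly on the last control point
    return path
```

With only a profile and a sun as control points, the duplicated endpoints make the segment a straight line. The visible curvature comes from adding intermediate control points.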

The correspondence is clear—categorization doesn't exist in a vacuum; it creates real harm. In this view, the historical perpetrators experience poetic justice, facing scenarios where their own systems of violence are turned against them. By including figures like Hitler and Pol Pot as mere data points with arbitrary scores, the work underscores categorization's fundamental absurdity—even genocidal dictators become interchangeable nodes when reduced to numerical metrics. Meanwhile, the generated profiles—people we've just met, whose dreams and hobbies we've learned—are assigned to real countries and specific tragic scenarios based on how modern categorization systems would target them.

Temporal Function & Historical Continuity

An optional timeline function, available only in View 2, allows users to scroll through different time periods from 1500 to 2000 in 100-year increments. This feature strips away historical context, placing all profiles on the same dehumanizing timeline. It reveals the uncomfortable truth that while the language changes—from "racial purity" to "risk assessment" to "predictive analytics"—the logic of categorization remains constant across centuries. The timeline function doesn't educate about historical differences; it collapses them, showing how systems of dehumanization simply rebrand while continuing to sort, exclude, and harm.
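The timeline's periods, and one way to snap a continuous scroll value onto them, can be sketched as follows. `snap_to_period` and its clamping behavior are hypothetical. The source specifies only the range (1500 to 2000) and the 100-year step.

```python
PERIODS = list(range(1500, 2001, 100))  # 1500, 1600, ..., 2000

def snap_to_period(year: float) -> int:
    """Clamp a scrolled year into the timeline's range and snap it to the nearest century."""
    clamped = min(max(year, PERIODS[0]), PERIODS[-1])
    return min(PERIODS, key=lambda p: abs(p - clamped))
```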

When opened, the timeline shows the years from 1500 to 2000 in 100-year intervals:

Symbolism in Design

Every technical choice in "On Culture" serves the conceptual critique. The use of AI-generated profiles is itself a meta-commentary: the same technology that powers modern categorization systems is deployed here to expose their absurdity. The orbital visualization references both solar system models of perfection and panopticon-style surveillance, where all subjects are visible and positioned relative to a central authority. The translucent rings suggest the permeability and arbitrariness of categories, while the starfield background evokes both the scale of humanity and the cold, distant gaze of systems that treat people as data points.

The profiles themselves are designed to resist reduction. Each includes not just scores but names, ages, occupations, hobbies, and dreams. A person is not their categorization score. The consequence scenarios in View 2 are researched and realistic, grounded in actual systems that exist today, ensuring the critique doesn't remain abstract but connects to lived experiences of harm.

The interactive navigation—zoom, pan, rotate through three-dimensional space—transforms passive observation into active exploration, forcing viewers to hunt for individual stories within the system's vast scope. This mirrors how real algorithmic systems obscure individual humanity behind interfaces and dashboards. The dual scoring system, displaying both the original four eugenic metrics and the three modern categorization types, reveals that despite superficial changes in language and technology, the logic of ranking human worth remains fundamentally unchanged.

Project Specific Q + A

How are modern algorithms similar to historical eugenics?

I want this parallel to be drawn from three choices:

a) combining fictional profiles with historical figures;

b) the fact that fictional profiles and historical figures can, importantly, land on either side of the "good"/"bad" divide;

c) titling and treating two of the three categorization systems (when displaying data) as Historical Eugenics and Algorithmic Categorization. This draws a contrast between them while being direct about their similarities, since both act on each profile along the same axis.

What happens when you categorize people without their human-ness?

This question is one of the anchors of my project at a fundamental level. I have always found it frightening how people can be distilled down to numbers, how ruthlessly the dimensions of a life can be condensed into calculations. I want to show that without this humanity, this human-ness, we are void of any goals, love, or life. Thus, my visualization mimics space, the largest known void, where everything coexists at unparalleled distance, and forges this void.

How do categorization systems minimize people with complex, intersectional identities?

In this project, I want the generated profiles, though fictional, to be as detailed, visceral, imaginable, and relevant as possible. They should be so detailed that, when we read one, we zoom into that person's human-ness and understand how unique they are. Most importantly, this prepares the viewer for the zoom-out, when the narrative is replaced with just a dot on a chart, a point in data, an element in a visual, or, in the case of my zoom-out, all three.

What are the actual harms that come from forms of categorization today?

Most obviously, there are discrimination, prejudice, preconceived bias, and a never-ending pursuit of fairness in a world categorized as unfair. But I am also driven to understand what these lead to, and how their implications both negate and propel a self-sustaining cycle. I want this comparison to be shown through the visual change in my chart as the mode switches from the profiles' base view to the Consequences view.

Why do we recognize historical eugenics as obviously bad but might not see the same patterns in contemporary systems?

I have always been fascinated by how situational, or context-dependent, our interpretations can be, and how subconsciously biased many of our thoughts become as they are churned out. It strikes me that this oxymoronic parallel is also distinctly prevalent in the issue my project aims to expose, and I find that exposing it can push the project's commentary further. I want to play with time as a means of visualizing it, taking the same situations and placing them in different contexts. In one sense, this forces a more logical reading at face value; it also shifts the underlying meaning behind each part, so that as the time shifts, our perspective shifts.